
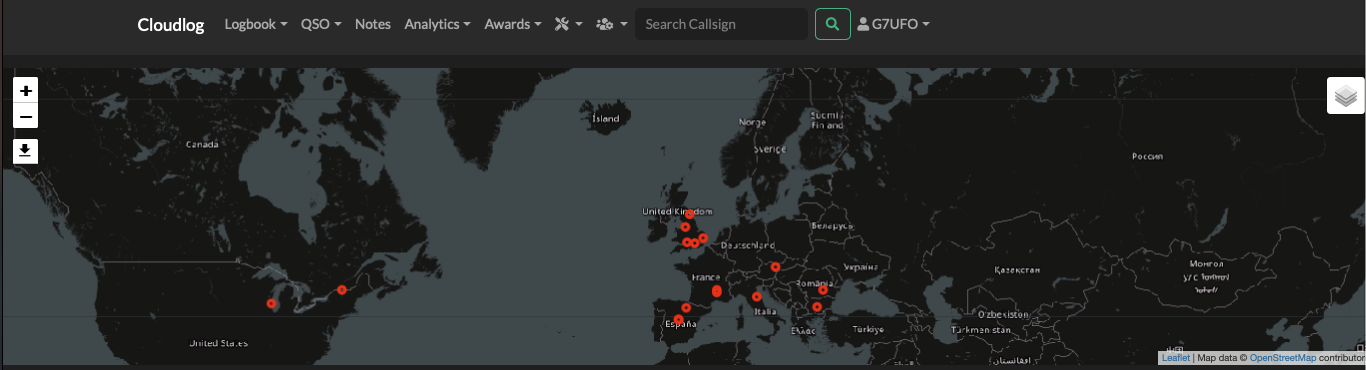

How I run Cloudlog

This is one of the admittedly not too uncommon occasions in amateur radio where what I do for a living intersects with one of my hobbies. I’ve been working in ‘development and cloud infrastructure’ for a while now and tend to favour containers to run my workloads.

I’ve tried a few logging systems and Cloudlog is the one I’ve settled on for the last two or three years. It’s an excellent piece of software and is under constant development by Peter (2M0SQL) and many contributors.

I’m not going to go into detail here about the many reasons you should consider using it; there are plenty of other articles for that. This is a technical article which does require some basic knowledge of running a service on Linux. If this isn’t for you, I’d recommend purchasing a subscription and letting Peter run and maintain it for you.

The Fun Part

OK, on to the fun part! Let’s start with some basic assumptions. You have:

- Some basic Linux knowledge

- A server with Docker (and the compose addon) installed on it

- A connection to the internet

- Access to the DNS settings for the domain name you’re planning to use (e.g. log.m9abc.radio).

I’ve used this setup in one variation or another for a couple of years now without any issues, however:

I can’t be held responsible for any data loss, servers catching fire or bad signal reports resulting from following this guide or using this setup.

If you’re happy with all that, let’s start!

Background

Until recently there was an official Docker image for Cloudlog, but due to the number of support requests it created, it was removed. I used to use this image as the basis for my own, which also added a helper to make configuration easier (and the container truly disposable).

The source for the image can be found in my repo, and each time a new version of Cloudlog is released I build a new image, which ends up in this repository.

I’ve reused the base of what was the official Docker image (as I don’t have much experience with PHP).

The original image ran Cloudlog as it would run in a non-containerised setup: after starting it for the first time, you would run through the in-app install steps.

I much prefer my containers to be configured at runtime (see The Twelve-Factor App, III. Config), so each time my Cloudlog container starts it writes the configuration files based on the environment variables provided. This will make sense when we configure it.
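Here’s a minimal sketch of the idea, using the DATABASE_* variable names from the Cloudlog service configured later in this article. It is hypothetical: it is not the helper script shipped in the image, the function name is made up, and the PHP lines only imitate the shape of a CodeIgniter-style database.php (the exact file and keys Cloudlog reads are an assumption here).

```shell
# Minimal sketch of runtime configuration: read settings from environment
# variables and render a config file each time the container starts.
# Hypothetical: NOT the helper script in ghcr.io/g7ufo/cloudlog, and the PHP
# keys below only imitate a CodeIgniter-style database.php.
render_database_config() {
    # Abort with an error if a required variable is missing (this exits the
    # shell, or only the subshell when called via $(...) or ( ... )).
    : "${DATABASE_HOSTNAME:?DATABASE_HOSTNAME must be set}"
    : "${DATABASE_NAME:?DATABASE_NAME must be set}"
    : "${DATABASE_USERNAME:?DATABASE_USERNAME must be set}"
    : "${DATABASE_PASSWORD:?DATABASE_PASSWORD must be set}"

    # Values are inserted verbatim: a single quote in a value would break
    # the generated PHP, so a real script should escape them.
    cat <<EOF
<?php
\$db['default']['hostname'] = '${DATABASE_HOSTNAME}';
\$db['default']['database'] = '${DATABASE_NAME}';
\$db['default']['username'] = '${DATABASE_USERNAME}';
\$db['default']['password'] = '${DATABASE_PASSWORD}';
EOF
}
```

An entrypoint would redirect the output to the real config path before starting the web server; because nothing is baked into the image, throwing the container away and starting a new one loses nothing.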

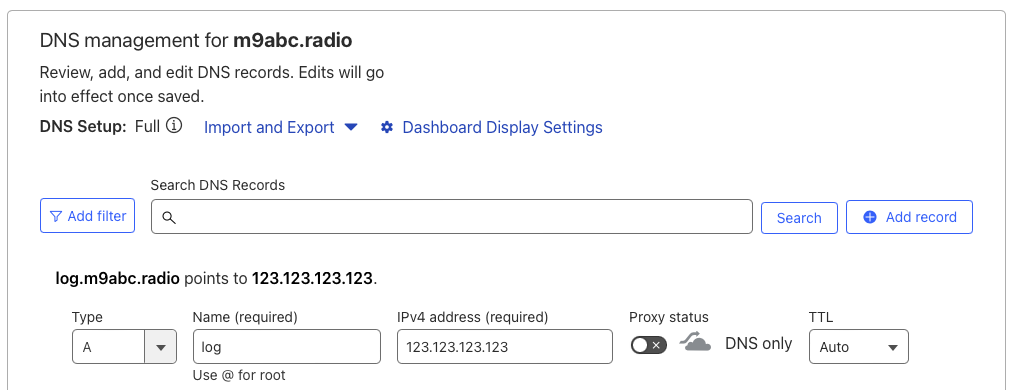

DNS

I use Cloudflare for my DNS, but any provider will do. Let’s start by configuring DNS to point at our server.

If you’re self-hosting, either use a dynamic DNS service or, if you’re lucky enough to have a static IP, point the record at that. You’ll also need to set up port forwarding on your router for ports 80 and 443, which Caddy listens on. An alternative to self-hosting is setting up a VPS with a provider such as DigitalOcean.

For this example, let’s use log.m9abc.radio as our host and 123.123.123.123 as our IPv4 address, i.e. an A record for log.m9abc.radio pointing at 123.123.123.123.

Note for Cloudflare users: the ‘Proxy’ feature should initially be turned off, as we’ll be using Let’s Encrypt to obtain an SSL certificate. It can be turned on afterwards if you like.
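Before starting Caddy it’s worth confirming the record actually resolves to your server, otherwise the Let’s Encrypt request will fail (and with the Cloudflare proxy switched on you’d see Cloudflare’s addresses instead of yours). A small sketch, assuming `dig` is installed; the function names are made up for this example:

```shell
# Succeeds if EXPECTED_IP appears as a whole line in ADDRESSES, a
# newline-separated list such as the output of `dig +short A <host>`.
# Hypothetical helper names; check_dns needs dig installed.
resolves_to() {
    expected_ip=$1
    addresses=$2
    printf '%s\n' "$addresses" | grep -qxF "$expected_ip"
}

# Look up HOST's A records and report whether they include EXPECTED_IP.
check_dns() {
    host=$1
    expected_ip=$2
    if resolves_to "$expected_ip" "$(dig +short A "$host")"; then
        echo "OK: $host resolves to $expected_ip"
    else
        echo "Not yet: $host does not resolve to $expected_ip" >&2
        return 1
    fi
}
```

With the example values that would be `check_dns log.m9abc.radio 123.123.123.123`. Matching whole lines means a CNAME in the `dig` output is simply skipped over.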

Docker Compose Configuration

Create a new docker-compose.yml file and let’s add the services section by section.

For the sake of this walkthrough we’ll be installing v2.6.7 of Cloudlog.

Caddy

This will be our web server which will handle requests to the Cloudlog container.

The great feature of Caddy is that it will also magically request and renew Let’s Encrypt TLS (SSL) certificates for us.

    services:
      caddy:
        container_name: caddy-gen
        image: wemakeservices/caddy-gen:latest
        restart: unless-stopped
        ports:
          - "443:443"
          - "80:80"
        volumes:
          - /var/run/docker.sock:/tmp/docker.sock:ro
          - caddy:/data/caddy

The last line, caddy:/data/caddy, references a Docker volume; we’ll define those in the final step.

Database

We’re using MariaDB, which is a drop-in replacement for MySQL.

Please update the MARIADB_DATABASE and MARIADB_ROOT_PASSWORD values!

      mariadb:
        container_name: mariadb
        image: mariadb:10.11
        restart: unless-stopped
        environment:
          MARIADB_DATABASE: cloudlog
          MARIADB_ROOT_PASSWORD: supersecretrootpassword
        volumes:
          - mariadb:/var/lib/mysql

We do need to perform some initial setup on the database before we run any of the other services. This is only required before the first run.

- Start the mariadb container.

- Create our user.

- Set permissions for our user against our cloudlog database.

- Grab the database schema for the version of Cloudlog we’re running. This will match the version we’re installing (in this case, v2.6.7).

- Create the schema in the cloudlog database.

- Remove the schema file we downloaded.

- Stop the mariadb container.
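One thing to watch: on its very first start the MariaDB container takes a little while to initialise, so a command run straight after `docker compose up -d mariadb` can fail to connect. A generic retry helper can be run in between; it is hypothetical and not part of Docker or MariaDB.

```shell
# Run a command repeatedly until it succeeds, up to ATTEMPTS tries with
# DELAY seconds between them. Hypothetical helper for this walkthrough.
# Usage: wait_for ATTEMPTS DELAY COMMAND [ARGS...]
wait_for() {
    attempts=$1
    delay=$2
    shift 2
    try=1
    # The command's output is discarded; only its exit status matters.
    while ! "$@" >/dev/null 2>&1; do
        if [ "$try" -ge "$attempts" ]; then
            echo "Gave up after $attempts attempts: $*" >&2
            return 1
        fi
        try=$((try + 1))
        sleep "$delay"
    done
}
```

For example, `wait_for 30 2 docker compose exec -T mariadb mariadb -uroot -psupersecretrootpassword -e 'SELECT 1'` keeps trying for roughly a minute before giving up.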

From your console:

    docker compose up -d mariadb
    echo "CREATE USER 'm9abc'@'%' IDENTIFIED BY 'supersecretlogpassword'" | docker compose exec -T mariadb mariadb -uroot -psupersecretrootpassword
    echo "GRANT ALL PRIVILEGES ON cloudlog.* TO 'm9abc'@'%'" | docker compose exec -T mariadb mariadb -uroot -psupersecretrootpassword
    curl -fsSL -o install.sql https://raw.githubusercontent.com/magicbug/Cloudlog/2.6.7/install/assets/install.sql
    cat install.sql | docker compose exec -T mariadb mariadb -uroot -psupersecretrootpassword cloudlog
    rm install.sql
    docker compose down
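The statements above embed the password in a single-quoted SQL string, so a password containing a quote would break them. If you’d rather generate the statements, here’s a small sketch that escapes quotes and backslashes. The function names are hypothetical, and it assumes the server’s default SQL mode (where backslash is an escape character).

```shell
# Wrap a value in single quotes for use as a MariaDB string literal,
# doubling backslashes and single quotes (assumes NO_BACKSLASH_ESCAPES is off).
sql_quote() {
    printf "'%s'" "$(printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e "s/'/''/g")"
}

# Emit the CREATE USER and GRANT statements from the setup steps above.
# The database name is inserted as-is inside backticks.
create_user_sql() {
    user=$1
    password=$2
    database=$3
    printf 'CREATE USER %s@%s IDENTIFIED BY %s;\n' \
        "$(sql_quote "$user")" "'%'" "$(sql_quote "$password")"
    printf 'GRANT ALL PRIVILEGES ON `%s`.* TO %s@%s;\n' \
        "$database" "$(sql_quote "$user")" "'%'"
}
```

The output can be piped in exactly like the `echo` lines: `create_user_sql m9abc 'supersecretlogpassword' cloudlog | docker compose exec -T mariadb mariadb -uroot -psupersecretrootpassword`.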

Cloudlog

      cloudlog:
        container_name: cloudlog
        image: ghcr.io/g7ufo/cloudlog:2.6.7
        restart: unless-stopped
        environment:
          LOCATOR: IO94XX
          BASE_URL: https://log.m9abc.radio/
          CALLBOOK: qrz
          CALLBOOK_USERNAME: m9abc
          CALLBOOK_PASSWORD: supersecretcallbookpassword
          DATABASE_HOSTNAME: mariadb
          DATABASE_NAME: cloudlog
          DATABASE_USERNAME: m9abc
          DATABASE_PASSWORD: supersecretlogpassword
          DATABASE_IS_MARIADB: "yes"
          DEVELOPER_MODE: "no"
        volumes:
          - cloudlog-eqsl:/var/www/html/images/eqsl_card_images
          - cloudlog-backup:/var/www/html/backup
          - cloudlog-uploads:/var/www/html/uploads
        labels:
          virtual.host: log.m9abc.radio
          virtual.port: 80
          virtual.tls: you@example.com

As you can see we’re into configuration now.

environment

This section provides values for Cloudlog’s configuration and is picked up by my helper script.

Most of these are self-explanatory.

- DEVELOPER_MODE: As the name suggests, this controls developer mode; “no” disables it. Set it to “yes” if you’d like to enable it. If it isn’t specified in the file, it defaults to “no”.

- CALLBOOK: Can be qrz or hamqth only. Set CALLBOOK_USERNAME and CALLBOOK_PASSWORD to your credentials for that callbook.

- DATABASE_HOSTNAME: Because we’re running MariaDB in the same file, we can refer to it by its service name, mariadb.

- DATABASE_NAME: This should match MARIADB_DATABASE in the mariadb service.

- DATABASE_USERNAME and DATABASE_PASSWORD: These should match the user and password we created during the database setup above.

- DATABASE_IS_MARIADB: We’re using MariaDB, so the helper script makes a minor adjustment for compatibility.
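If you want to catch typos before starting the stack, the rules above can be checked with a few lines of shell. This is a hypothetical pre-flight check that only mirrors what’s described here; it isn’t part of the image’s helper script.

```shell
# Hypothetical pre-flight check mirroring the rules described above.
# It is not part of the Cloudlog image; it only validates the variables.
validate_cloudlog_env() {
    # DEVELOPER_MODE is optional and defaults to "no".
    DEVELOPER_MODE=${DEVELOPER_MODE:-no}
    case $DEVELOPER_MODE in
        yes|no) ;;
        *) echo "DEVELOPER_MODE must be yes or no" >&2; return 1 ;;
    esac

    # Only the QRZ and HamQTH callbooks are supported.
    case ${CALLBOOK:-} in
        qrz|hamqth) ;;
        *) echo "CALLBOOK must be qrz or hamqth" >&2; return 1 ;;
    esac

    # The callbook credentials must be present.
    if [ -z "${CALLBOOK_USERNAME:-}" ] || [ -z "${CALLBOOK_PASSWORD:-}" ]; then
        echo "CALLBOOK_USERNAME and CALLBOOK_PASSWORD must be set" >&2
        return 1
    fi
}
```

The function runs in the current shell, so after a successful call DEVELOPER_MODE holds the effective value, including the “no” default.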

labels

This section configures Caddy to serve content from Cloudlog and also to go and grab a Let’s Encrypt certificate for your log domain.

- virtual.host: This should be the domain of your log, which we set up in the DNS section above. Do not include https://.

- virtual.port: The port Cloudlog listens on inside its container (80 here).

- virtual.tls: This will need to be a valid email address.
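virtual.host and BASE_URL describe the same domain in two forms: a bare hostname, and a full URL with a trailing slash as in the example. A tiny sketch for deriving both from one value so they can’t drift apart; the function names are hypothetical.

```shell
# Strip any http:// or https:// prefix and everything from the first "/",
# leaving a bare hostname suitable for virtual.host. Hypothetical helper.
bare_host() {
    h=${1#http://}
    h=${h#https://}
    h=${h%%/*}
    printf '%s\n' "$h"
}

# Build a BASE_URL-style value (https:// and a trailing slash) from either form.
base_url() {
    printf 'https://%s/\n' "$(bare_host "$1")"
}
```

Both accept either form, so `bare_host https://log.m9abc.radio/` and `base_url log.m9abc.radio` give the two values used in the compose file.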

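Cron Jobs

Cloudlog relies on scheduled requests to upload QSOs to services such as Club Log, LoTW and QRZ, and to refresh its lookup data. I use Ofelia for this: it watches the Docker socket and runs jobs defined as container labels.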

      cron:
        container_name: ofelia-cron
        image: mcuadros/ofelia:latest
        depends_on:
          - cloudlog
        command: daemon --docker
        volumes:
          - /var/run/docker.sock:/var/run/docker.sock:ro
        labels:
          ofelia.job-local.dummy.schedule: "@weekly"
          ofelia.job-local.dummy.command: date

Alongside this we also need to add the following to the labels section of the cloudlog service:

    ofelia.enabled: true
    ofelia.job-exec.dummy-cl.schedule: "@every 60m"
    ofelia.job-exec.dummy-cl.command: touch /tmp/cron-dummy
    ofelia.job-exec.upload-qsos-clublog.schedule: 0 */6 * * *
    ofelia.job-exec.upload-qsos-clublog.command: curl --silent http://localhost/index.php/clublog/upload
    ofelia.job-exec.upload-qsos-lotw.schedule: 0 */1 * * *
    ofelia.job-exec.upload-qsos-lotw.command: curl --silent http://localhost/index.php/lotw/lotw_upload
    ofelia.job-exec.upload-qsos-qo100dx.schedule: 0 19 * * *
    ofelia.job-exec.upload-qsos-qo100dx.command: curl --silent http://localhost/index.php/webadif/export
    ofelia.job-exec.upload-qsos-qrz.schedule: 6 */6 * * *
    ofelia.job-exec.upload-qsos-qrz.command: curl --silent http://localhost/index.php/qrz/upload
    ofelia.job-exec.sync-qsos-eqsl.schedule: 9 */6 * * *
    ofelia.job-exec.sync-qsos-eqsl.command: curl --silent http://localhost/index.php/eqsl/sync
    ofelia.job-exec.update-lotw-users-db.schedule: "@weekly"
    ofelia.job-exec.update-lotw-users-db.command: curl --silent http://localhost/index.php/lotw/load_users
    ofelia.job-exec.update-lotw-users-activity.schedule: 10 1 * * 1
    ofelia.job-exec.update-lotw-users-activity.command: curl --silent http://localhost/index.php/update/lotw_users
    ofelia.job-exec.update-clublog-scp-database.schedule: "@weekly"
    ofelia.job-exec.update-clublog-scp-database.command: curl --silent http://localhost/index.php/update/update_clublog_scp
    ofelia.job-exec.update-dok.schedule: "@monthly"
    ofelia.job-exec.update-dok.command: curl --silent http://localhost/index.php/update/update_dok
    ofelia.job-exec.update-sota.schedule: "@monthly"
    ofelia.job-exec.update-sota.command: curl --silent http://localhost/index.php/update/update_sota
    ofelia.job-exec.update-wwff.schedule: "@monthly"
    ofelia.job-exec.update-wwff.command: curl --silent http://localhost/index.php/update/update_wwff
    ofelia.job-exec.update-pota.schedule: "@monthly"
    ofelia.job-exec.update-pota.command: curl --silent http://localhost/index.php/update/update_pota
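Rather than waiting for a schedule to fire, you can trigger any of these endpoints by hand to check it works. The sketch below runs the same curl command inside the cloudlog container via `docker compose exec`; the function name and the DRY_RUN switch are inventions for this example.

```shell
# Hypothetical helper: run the same request an ofelia job-exec would, inside
# the cloudlog service container. Run from the directory with docker-compose.yml.
# Set DRY_RUN=1 to print the command instead of running it.
CLOUDLOG_SERVICE=${CLOUDLOG_SERVICE:-cloudlog}

run_cloudlog_job() {
    path=$1
    # Replace the positional parameters with the full command line.
    set -- docker compose exec -T "$CLOUDLOG_SERVICE" \
        curl --silent "http://localhost/index.php/$path"
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}
```

For example, `run_cloudlog_job lotw/load_users` performs the weekly LoTW user list update immediately.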

Volumes

    volumes:
      caddy:
      cloudlog-eqsl:
      cloudlog-backup:
      cloudlog-uploads:
      mariadb:

Remember I mentioned we’d set up volumes earlier? Well, here they are!

The Finished File

The finished file should look like the one I’ve prepared here.

Fire it up!

    docker compose up -d

This will start all the services, and you should then be able to reach Cloudlog at your domain (https://log.m9abc.radio in this example).

The default username is m0abc and the password is demo.

Updating

Updating is as simple as changing the image version (ghcr.io/g7ufo/cloudlog:2.6.7 in the example above) to the new one and restarting with:

    docker compose down
    docker compose up -d
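If you’d rather not edit the file by hand, the tag change can be scripted. A sketch using sed; the function name is hypothetical, it assumes the image line looks like the example, and it leaves the install.sql URL alone since that’s only used for the first install.

```shell
# Hypothetical helper: replace the Cloudlog image tag in a compose file.
# Usage: bump_cloudlog_tag NEW_TAG [COMPOSE_FILE]
bump_cloudlog_tag() {
    new_tag=$1
    file=${2:-docker-compose.yml}
    # Rewrite "image: ghcr.io/g7ufo/cloudlog:<anything>" to the new tag,
    # via a temporary file so a failed sed doesn't truncate the original.
    sed "s|\(image: ghcr\.io/g7ufo/cloudlog:\).*|\1${new_tag}|" "$file" > "$file.tmp" &&
        mv "$file.tmp" "$file"
}
```

Run it with the new version number as the first argument, then restart with the two commands above.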

Caveat

If Cloudlog’s configuration file changes upstream, the helper script may fail to configure it properly. This hasn’t happened yet and I’ll do my best to keep on top of it. If you find this is the case, please raise an [issue](https://github.com/g7ufo/cloudlog/issues) in the repo.

Disaster Recovery

I’m currently using a script to dump the database and create an archive of the three Cloudlog volumes. I’m working on a better solution, which I’ll share in the repo and on this blog.
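Until that’s ready, here is a rough sketch of what such a script can look like. It is hypothetical rather than the script described above, and assumes it runs from the directory containing docker-compose.yml, that the service names, database name and mount paths match this article, and that the Cloudlog image includes tar.

```shell
# Hypothetical backup sketch, not the script mentioned above. Assumes the
# service names, database name and mount paths used in this article, and
# that the Cloudlog image includes tar.

# Name of the per-run backup directory, e.g. cloudlog-backup-20240101-120000.
backup_dir_name() {
    printf 'cloudlog-backup-%s\n' "$1"
}

# Usage: backup_cloudlog ROOT_PASSWORD
backup_cloudlog() {
    root_password=$1
    dest=$(backup_dir_name "$(date +%Y%m%d-%H%M%S)")
    mkdir -p "$dest" || return 1

    # Dump the cloudlog database; --single-transaction gives a consistent
    # snapshot of InnoDB tables without locking them.
    docker compose exec -T mariadb \
        mariadb-dump -uroot -p"$root_password" --single-transaction cloudlog \
        > "$dest/cloudlog.sql" || return 1

    # Archive the three Cloudlog volumes via the paths they're mounted at.
    docker compose exec -T cloudlog \
        tar czf - -C /var/www/html images/eqsl_card_images backup uploads \
        > "$dest/cloudlog-volumes.tar.gz" || return 1

    echo "Backup written to $dest"
}
```

Run it as `backup_cloudlog supersecretrootpassword` and copy the resulting directory off the server. Restoring the database works like the schema step earlier: pipe cloudlog.sql into `docker compose exec -T mariadb mariadb -uroot -psupersecretrootpassword cloudlog`.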